Nuclear data activities for medium mass and heavy nuclei at Los Alamos

LA-UR-22-27140

Matthew Mumpower

Nuclear Data (2022)

Tuesday, July 26th, 2022

Theoretical Division

Los Alamos National Laboratory Caveat

The submitted materials have been authored by an employee or employees of Triad National Security, LLC (Triad) under contract with the U.S. Department of Energy/National Nuclear Security Administration (DOE/NNSA).

Accordingly, the U.S. Government retains an irrevocable, nonexclusive, royalty-free license to publish, translate, reproduce, use, or dispose of the published form of the work and to authorize others to do the same for U.S. Government purposes.

Nuclear data is ubiquitous in modern applications

Example: fission yields are needed for a variety of applications and cutting-edge science

Industrial applications: simulation of reactors, fuel cycles, waste management

Experiments: backgrounds, isotope production with radioactive ion beams (fragmentation)

Science applications: nucleosynthesis, light curve observations

Other applications: national security, nonproliferation, nuclear forensics

Modern applications

Modern applications require a blending of data and theory (models)

Data are often sufficiently precise, but they are hard to obtain and there are never enough

Theory provides great physical insight and extrapolation, but it is limited by its assumptions and lack of precision

How do we go about merging information produced by both?
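One textbook way to merge a model prediction with a measurement, assuming both carry independent Gaussian uncertainties, is inverse-variance weighting (the scalar case of a Bayesian update). This is a minimal illustrative sketch, not the procedure used at LANL; real evaluations work with full covariance matrices, and the function name `combine_gaussian` is hypothetical.

```python
import math


def combine_gaussian(model_value, model_sigma, data_value, data_sigma):
    """Inverse-variance weighted combination of two independent Gaussian estimates.

    Assumption: no correlation between model and data. Returns the posterior
    mean and standard deviation; the more precise input gets the larger weight.
    """
    w_model = 1.0 / model_sigma**2
    w_data = 1.0 / data_sigma**2
    mean = (w_model * model_value + w_data * data_value) / (w_model + w_data)
    sigma = math.sqrt(1.0 / (w_model + w_data))
    return mean, sigma


# Toy numbers: a model with a 10% uncertainty and a measurement with a 2% uncertainty.
mean, sigma = combine_gaussian(1.00, 0.10, 1.05, 0.02)
print(f"combined: {mean:.4f} +/- {sigma:.4f}")
```

The combined value sits close to the precise measurement, while the model still tightens the uncertainty slightly; where data are absent, only the model term remains.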

A solution: the NEXUS framework

We have built a Python 3 platform to handle this complex task; it provides:

access to nuclear data (experiment, evaluation, etc.) and metadata

access to theoretical models (CoH, BeOH, eFRLDM, DRW, etc.)

seamless integration between data, codes and applications (marshalling); straightforward data release
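The talk does not describe NEXUS's internal interfaces, so the following is only a hypothetical sketch of what access to nuclear data plus metadata can look like: records tagged by target, reaction and provenance, queried by attribute or metadata key. `DataRecord` and `DataRegistry` are invented names, not the NEXUS API.

```python
from dataclasses import dataclass, field


@dataclass
class DataRecord:
    """One dataset plus its provenance (hypothetical schema, not the NEXUS API)."""
    target: str        # e.g. "Pu239"
    reaction: str      # e.g. "(n,g)"
    source: str        # "experiment", "evaluation" or "theory"
    reference: str     # citation, library or code name
    metadata: dict = field(default_factory=dict)


class DataRegistry:
    """Hypothetical in-memory store returning records that match every criterion."""

    def __init__(self):
        self._records = []

    def add(self, record):
        self._records.append(record)

    def query(self, **criteria):
        def matches(rec):
            for key, wanted in criteria.items():
                # Look at record fields first, then at free-form metadata.
                actual = getattr(rec, key) if hasattr(rec, key) else rec.metadata.get(key)
                if actual != wanted:
                    return False
            return True

        return [rec for rec in self._records if matches(rec)]


registry = DataRegistry()
registry.add(DataRecord("Pu239", "(n,g)", "experiment", "Mosby et al. (2018)",
                        {"max_energy_MeV": 1.0}))
registry.add(DataRecord("Pu239", "(n,g)", "evaluation", "ENDF/B-VIII.0"))
registry.add(DataRecord("Pu239", "(n,2n)", "theory", "CoH"))

for rec in registry.query(target="Pu239", reaction="(n,g)"):
    print(rec.source, rec.reference)
```

Keeping provenance on every record is what lets experiment, evaluation and theory sit side by side and still be filtered apart later.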

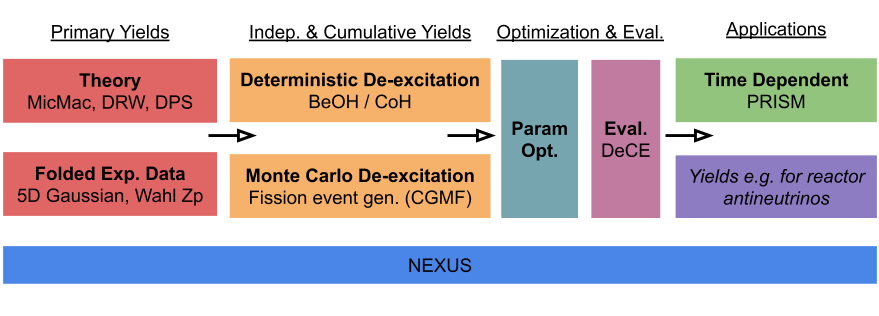

Example evaluation workflow: fission yields

Our current workflow combines many distinct codes and data

NEXUS provides

code structures and marshalling that allow theory, data and applications to communicate seamlessly
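Marshalling here means converting one code's output into the form the next code expects. As a hedged illustration (not NEXUS code), each stage below is a plain function that takes and returns a dictionary; `model_stage` is a toy stand-in for a physics code, and the unit conventions are assumptions.

```python
from typing import Any, Callable, Dict, List

Payload = Dict[str, Any]
Stage = Callable[[Payload], Payload]


def run_pipeline(payload: Payload, stages: List[Stage]) -> Payload:
    """Pass the payload through each (hypothetical) stage in order."""
    for stage in stages:
        payload = stage(payload)
    return payload


def model_stage(payload: Payload) -> Payload:
    """Toy stand-in for a wrapped model run: cross sections in mb on an MeV grid."""
    out = dict(payload)  # do not mutate the caller's data
    out["xs_mb"] = [1000.0 / (1.0 + e) for e in payload["energies_MeV"]]
    return out


def to_application_units(payload: Payload) -> Payload:
    """Marshal into the (assumed) units a downstream application wants: eV and barns."""
    out = dict(payload)
    out["table"] = [(e * 1.0e6, xs / 1000.0)
                    for e, xs in zip(payload["energies_MeV"], payload["xs_mb"])]
    return out


result = run_pipeline({"energies_MeV": [0.0, 1.0, 3.0]}, [model_stage, to_application_units])
print(result["table"])
```

Keeping stages as small functions with a shared payload makes it easy to swap a model or an application without touching the rest of the chain.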

Cross section evaluation

More recently, we have used NEXUS to evaluate the $^{239}$Pu neutron-induced cross sections in the fast energy range

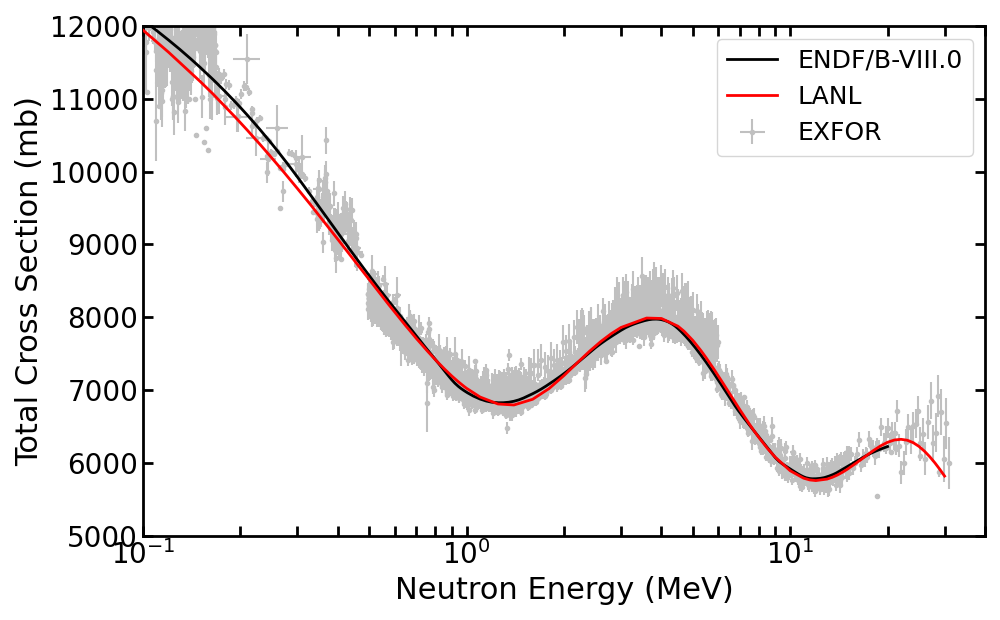

Total neutron-induced cross section

Modeled with CoH (Kawano) using 15 experimental datasets

Soukhovitskii (2005) optical model [$\beta \sim 0.21$]; coupled channels with 7 levels

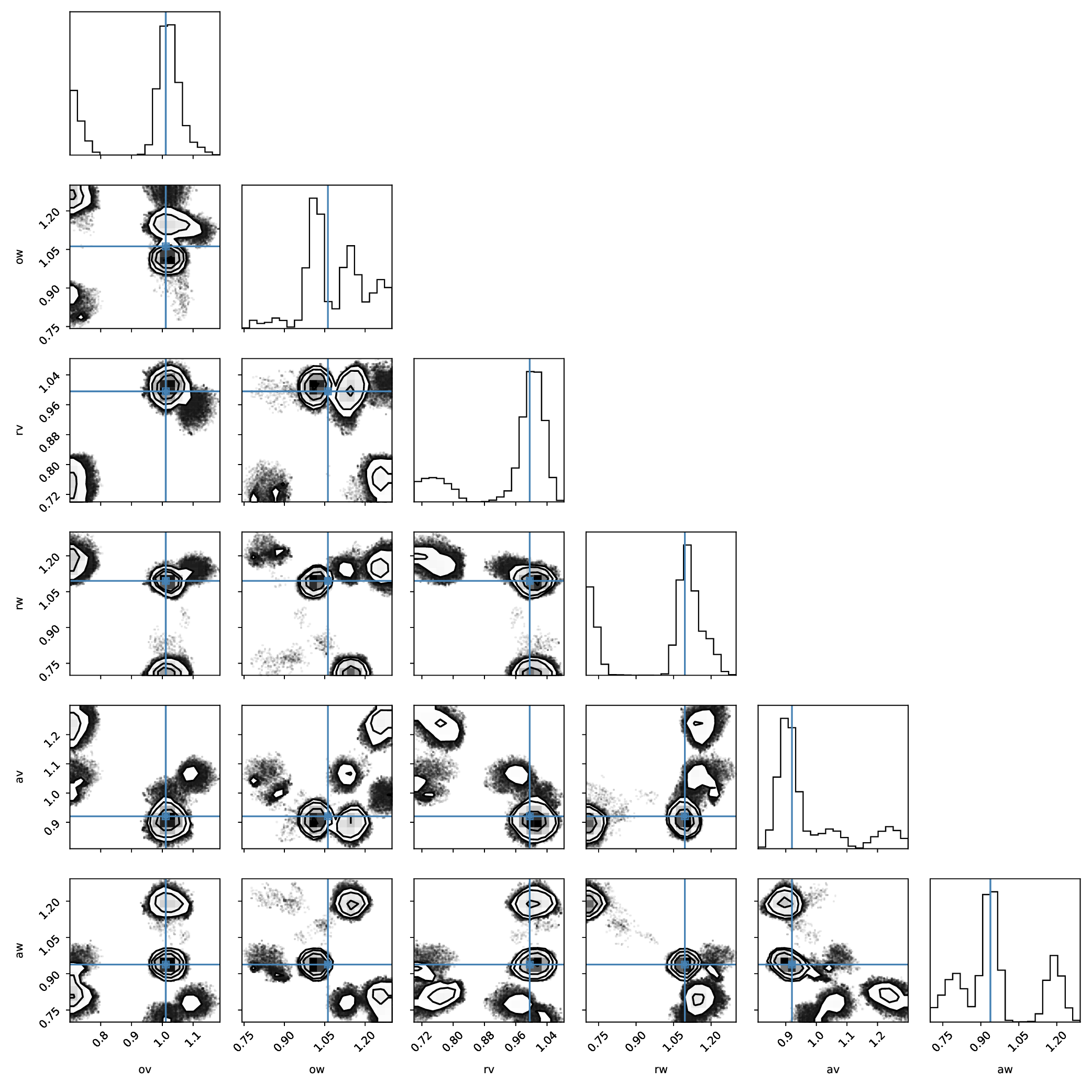

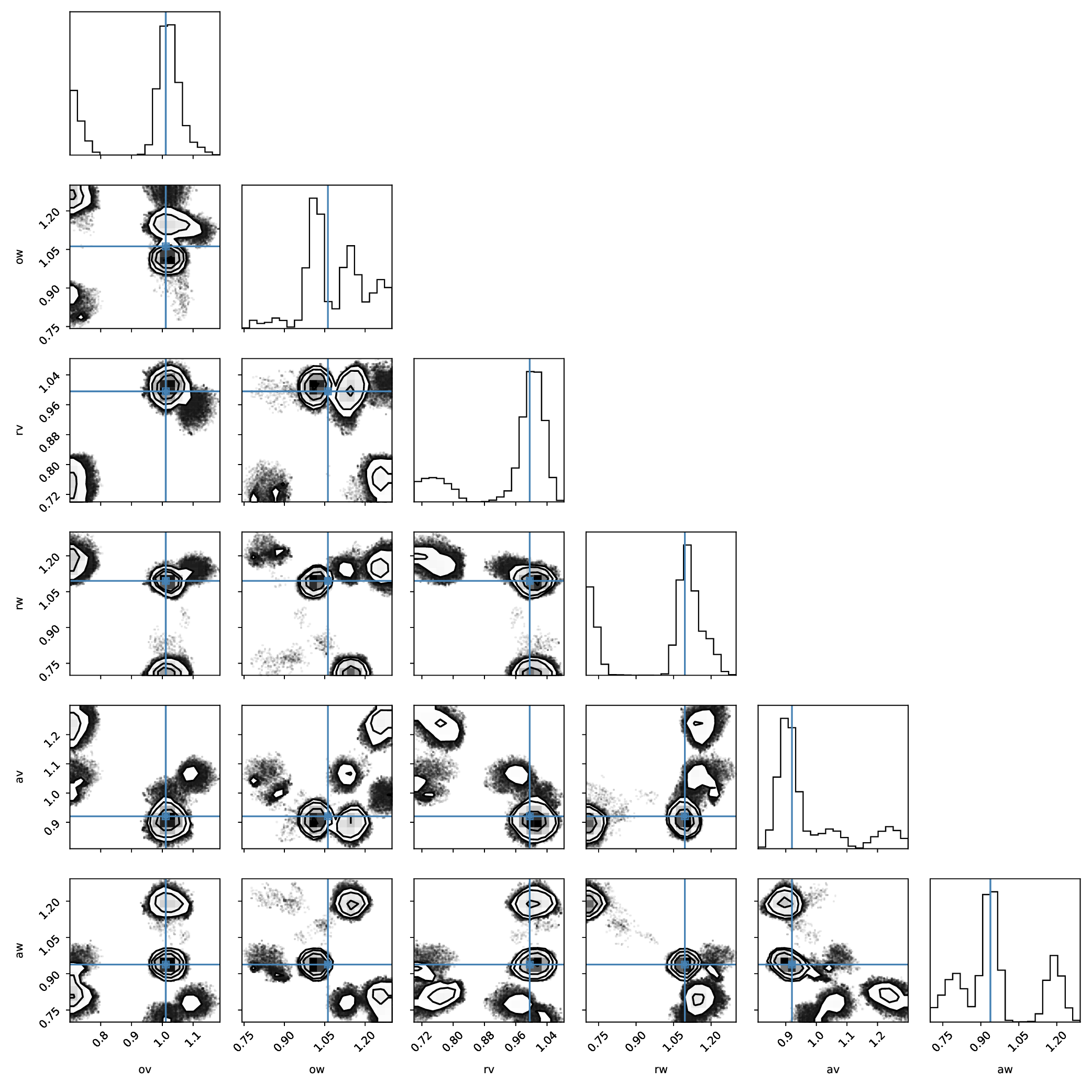
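Fitting a model such as CoH to many experimental datasets at once needs a single figure of merit. A common minimal choice, sketched here under the simplifying assumption of uncorrelated uncertainties (real evaluations also account for correlations within and across datasets), is the chi-square summed over all datasets. The model below is a toy callable, not CoH, and the datasets are made up.

```python
from typing import Callable, Iterable, List, Tuple

Point = Tuple[float, float, float]  # (energy, measured value, 1-sigma uncertainty)


def chi_square(model: Callable[[float], float], datasets: Iterable[List[Point]]):
    """Return (chi2, number of points) over all datasets; assumes no correlations."""
    chi2 = 0.0
    n_points = 0
    for data in datasets:
        for energy, value, sigma in data:
            chi2 += ((model(energy) - value) / sigma) ** 2
            n_points += 1
    return chi2, n_points


# Two tiny, made-up datasets and a toy linear "model".
datasets = [
    [(1.0, 2.0, 0.1), (2.0, 4.2, 0.1)],
    [(3.0, 6.0, 0.5)],
]
chi2, n = chi_square(lambda e: 2.0 * e, datasets)
print(f"chi2 = {chi2:.2f} for {n} points, chi2/point = {chi2 / n:.2f}")
```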

Bayesian optimization module

Hyperparameter optimization of optical model parameters (potential depths, radii and diffuseness)

The algorithm performs excellently, on par with past ENDF evaluation efforts

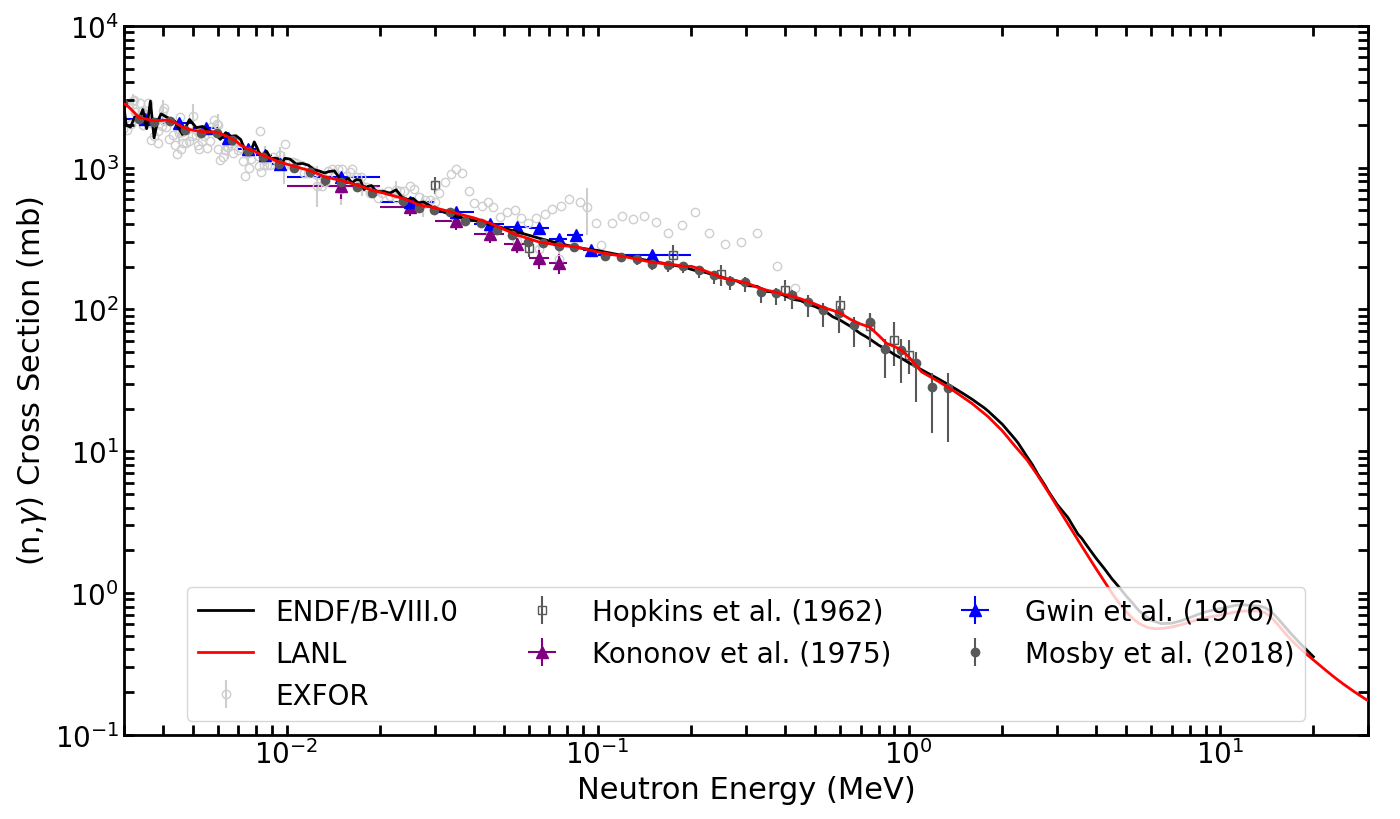
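To make the idea concrete, here is a minimal one-parameter Bayesian optimization loop: a Gaussian-process surrogate with a squared-exponential kernel and an expected-improvement acquisition evaluated on a grid. Everything here is an assumption for illustration (kernel, length scale, grid, and the toy quadratic "chi-square" in a hypothetical radius parameter); it is not the module used in the evaluation, which tunes several optical-model parameters at once.

```python
import math
import random


def rbf(a, b, length=0.2):
    """Squared-exponential kernel on the unit interval (length scale is an assumption)."""
    return math.exp(-0.5 * ((a - b) / length) ** 2)


def cholesky(m):
    """Lower-triangular L with L L^T = m (m symmetric positive definite)."""
    n = len(m)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(low[i][k] * low[j][k] for k in range(j))
            if i == j:
                low[i][i] = math.sqrt(max(m[i][i] - s, 1e-12))
            else:
                low[i][j] = (m[i][j] - s) / low[j][j]
    return low


def forward(low, b):
    """Solve L y = b."""
    y = []
    for i, bi in enumerate(b):
        y.append((bi - sum(low[i][k] * y[k] for k in range(i))) / low[i][i])
    return y


def backward(low, y):
    """Solve L^T x = y."""
    n = len(y)
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (y[i] - sum(low[k][i] * x[k] for k in range(i + 1, n))) / low[i][i]
    return x


def gp_fit(us, zs, jitter=1e-6):
    """Zero-mean GP conditioned on (us, zs); returns a predictor u -> (mean, std)."""
    kmat = [[rbf(a, b) + (jitter if i == j else 0.0) for j, b in enumerate(us)]
            for i, a in enumerate(us)]
    low = cholesky(kmat)
    alpha = backward(low, forward(low, zs))

    def predict(u):
        k_star = [rbf(u, a) for a in us]
        mean = sum(k * a for k, a in zip(k_star, alpha))
        v = forward(low, k_star)
        var = max(1.0 - sum(vi * vi for vi in v), 1e-12)
        return mean, math.sqrt(var)

    return predict


def expected_improvement(mean, std, best):
    """Expected improvement below the current best value (minimization)."""
    z = (best - mean) / std
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
    return (best - mean) * cdf + std * pdf


def bayes_opt(objective, lo, hi, n_init=3, n_iter=12, seed=0):
    rng = random.Random(seed)
    xs = [rng.uniform(lo, hi) for _ in range(n_init)]
    ys = [objective(x) for x in xs]
    grid = [i / 200 for i in range(201)]  # candidate points on the unit interval
    for _ in range(n_iter):
        us = [(x - lo) / (hi - lo) for x in xs]  # rescale inputs to [0, 1]
        mu = sum(ys) / len(ys)
        sd = math.sqrt(sum((y - mu) ** 2 for y in ys) / len(ys)) or 1.0
        zs = [(y - mu) / sd for y in ys]  # standardize outputs
        predict = gp_fit(us, zs)
        best = min(zs)
        u_next = max(grid, key=lambda u: expected_improvement(*predict(u), best))
        x_next = lo + (hi - lo) * u_next
        xs.append(x_next)
        ys.append(objective(x_next))
    i_best = min(range(len(ys)), key=ys.__getitem__)
    return xs[i_best], ys[i_best], xs


def toy_chi2(r):
    """Made-up quadratic stand-in for a chi-square surface in a radius parameter (fm)."""
    return 100.0 * (r - 1.25) ** 2


best_r, best_chi2, history = bayes_opt(toy_chi2, 1.0, 1.5)
print(f"best r = {best_r:.3f} fm, chi2 = {best_chi2:.4f}, {len(history)} evaluations")
```

Because the surrogate is cheap compared with a full model run, the loop spends expensive evaluations only where the surrogate predicts an improvement or is still uncertain.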

$^{239}$Pu (n,$\gamma$) cross section

Rapid incorporation of new high-precision data, e.g. Mosby et al. (2018)

New model developments (M1 enhancement) can be incorporated just as rapidly

We can also integrate feedback from integral benchmarks when appropriate

$^{239}$Pu (n,2n) cross section

Model: new collective enhancement allows for simultaneous description of (n,f) and (n,2n) channels

[difficult to describe in past versions of CoH]

Tight integration of model and experimental data leads to updates relative to ENDF/B-VIII.0

Summary of $^{239}$Pu updates

We are in the process of updating the $^{239}$Pu cross sections in the fast energy range

(complementing the lower-energy IAEA / INDEN / ORNL work in the resonance range)

LANL has been overhauling its evaluation tools (CoH, CGMF, DeCE, Kalman, NEXUS, PySOK, SOK)

Focus on consistency throughout evaluation (we are evaluating more isotopes)

Model update: new collective enhancement allows for simultaneous description of (n,f) and (n,2n) channels

Model update: new inelastic scattering model using the Engelbrecht-Weidenmüller transformation

The new cross section evaluation (as well as nu-bar and PFNS) extends up to 30 MeV

New (n,$\gamma$) data from S. Mosby up to ~1 MeV

Neutron data standards $^{239}$Pu(n,f) cross section: includes covariance updates using templates and new (n,f) data from the fissionTPC (Snyder)

New nu-bar, including improved experimental UQ, Marini data and consistent CGMF modeling

New PFNS: INDEN non-model evaluation at thermal; Los Alamos model evaluation above thermal, including new Chi-Nu and CEA data

We are actively testing the file against integral benchmarks; work on covariances is starting

NEXUS for scientific applications

NEXUS is not limited to nuclear data evaluations

We can also use it for science (hoorah!)

Nuclear data in astrophysics

First prediction of dynamical isomer population in the astrophysical $r$ process

Some "astromers" may have directly observable consequences in kilonovae (e.g., the experimental effort led by K. Kolos @ LLNL)

Many measurement campaigns are now underway at ANL, CERN and FRIB (first-round experiment!)

NEXUS provided: access to the latest data in ENDF / ENSDF as well as NUBASE

Application of fission yields to the $r$-process

First application of FRLDM fission yields, compared with the standard symmetric split

The universality of the $r$ process may extend to lower mass numbers than previously believed; coproduction of precious metals

A Hubble Space Telescope proposal motivated by this work was recently accepted!

NEXUS provided: the marshalling between models and nucleosynthesis network
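For reference, the "standard symmetric split" baseline can be as simple as dividing the fissioning nucleus into two equal fragments. The sketch assumes one simple convention (optionally remove a fixed number of prompt neutrons first; any odd leftover proton or neutron goes to the second fragment) and stores yields as a dictionary normalized to two fragments per fission. An FRLDM yield table would instead spread the yield over many (Z, A); the function name is hypothetical.

```python
from collections import Counter
from typing import Dict, Tuple


def symmetric_split(z: int, a: int, nu: int = 0) -> Dict[Tuple[int, int], float]:
    """Fragment yields per fission for a simple symmetric split of nucleus (Z, A).

    Assumed convention: `nu` prompt neutrons are removed before splitting; when
    Z or A - nu is odd, the extra nucleon goes to the second fragment. Yields sum to 2.
    """
    a_frag = a - nu
    z1, a1 = z // 2, a_frag // 2
    z2, a2 = z - z1, a_frag - a1
    yields: Counter = Counter()
    yields[(z1, a1)] += 1.0
    yields[(z2, a2)] += 1.0
    return dict(yields)


print(symmetric_split(94, 280))        # two identical fragments
print(symmetric_split(93, 281, nu=2))  # odd leftovers go to the second fragment
```

Whatever yield model is plugged in, the same checks apply: the yields sum to two and charge and mass (after neutron emission) are conserved.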

Kilonovae are impacted by nuclear data uncertainties

Lanthanide / actinide production can vary drastically using different nuclear models

This in turn impacts heating, thermalization and light curves even for fixed ejected mass & velocity

NEXUS provided: the construction of the complete nuclear datasets

New predictions for heavy nuclei

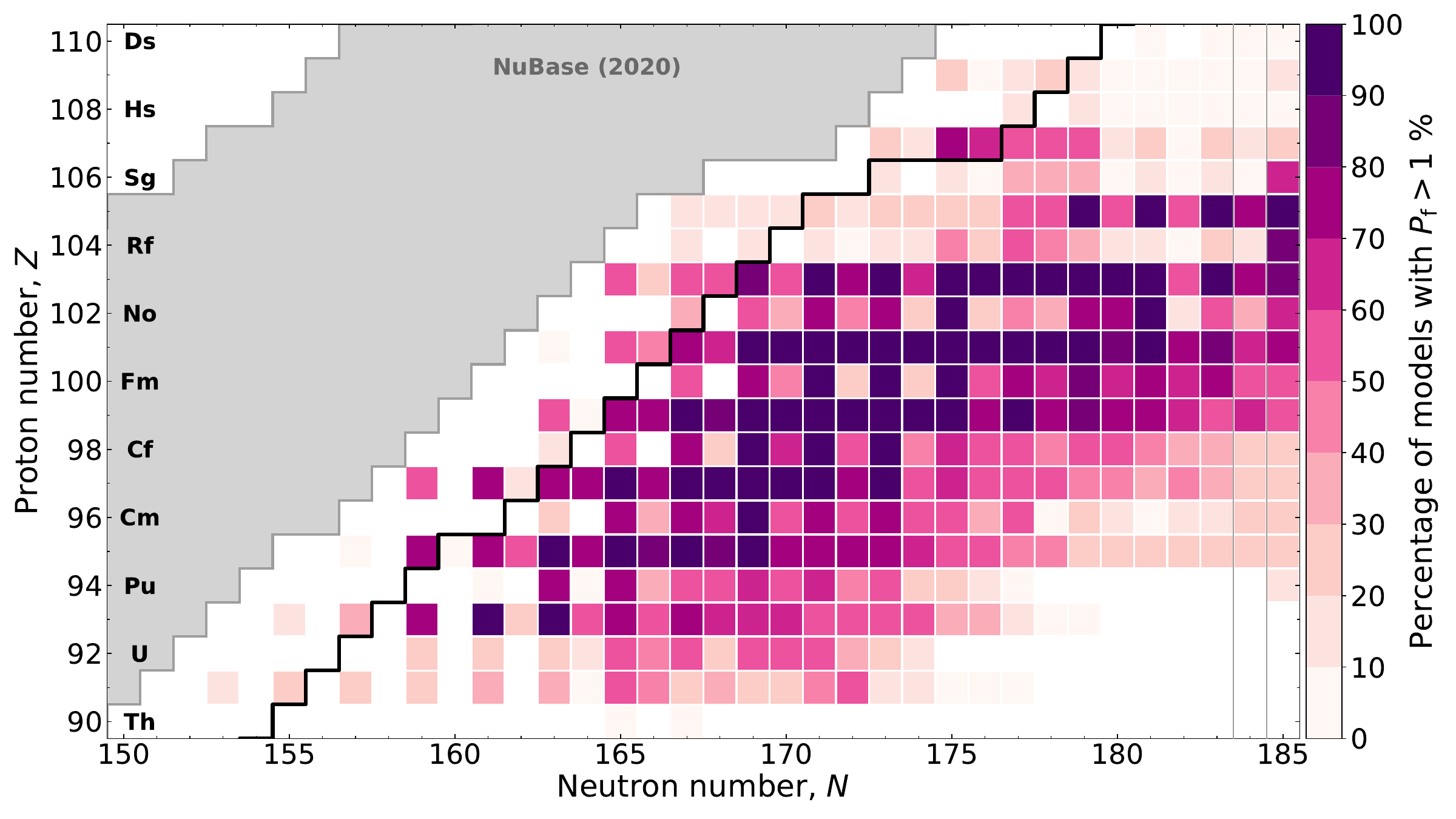

Predictions of the nuclei most likely to have significant $\beta$-delayed fission branchings

These nuclei could be accessible in the future with concerted experimental effort

NEXUS provided: the compilation of model results
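A β-delayed fission branching is commonly estimated by weighting, over the daughter excitation energies fed by β decay, the probability that the daughter fissions rather than emits neutrons or γ rays: $P_{\beta\mathrm{df}} \approx \sum_i I_i\,\Gamma_f(E_i)/[\Gamma_f(E_i)+\Gamma_n(E_i)+\Gamma_\gamma(E_i)]$, with the feeding intensities $I_i$ normalized to one. The sketch assumes the feeding intensities and widths are supplied from elsewhere (for instance a β-strength model and a statistical Hauser-Feshbach code); the toy widths are invented and the function names are hypothetical.

```python
from typing import Callable, List, Tuple


def beta_delayed_fission_probability(
    feeding: List[Tuple[float, float]],
    widths: Callable[[float], Tuple[float, float, float]],
) -> float:
    """Sum of I_i * Gf / (Gf + Gn + Gg), with intensities I_i renormalized to 1.

    feeding: (excitation energy in MeV, relative beta-feeding intensity) pairs
    widths:  E -> (fission, neutron, gamma) widths; assumed inputs, sum must be > 0
    """
    total = sum(intensity for _, intensity in feeding)
    prob = 0.0
    for energy, intensity in feeding:
        g_f, g_n, g_g = widths(energy)
        prob += (intensity / total) * g_f / (g_f + g_n + g_g)
    return prob


def toy_widths(energy: float) -> Tuple[float, float, float]:
    """Invented widths: no fission below a 4 MeV 'barrier', then Gf : Gn : Gg = 1 : 1 : 2."""
    return (0.0, 0.0, 1.0) if energy < 4.0 else (1.0, 1.0, 2.0)


print(beta_delayed_fission_probability([(1.0, 0.5), (5.0, 0.5)], toy_widths))
```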

Machine Learning with Nuclear Data

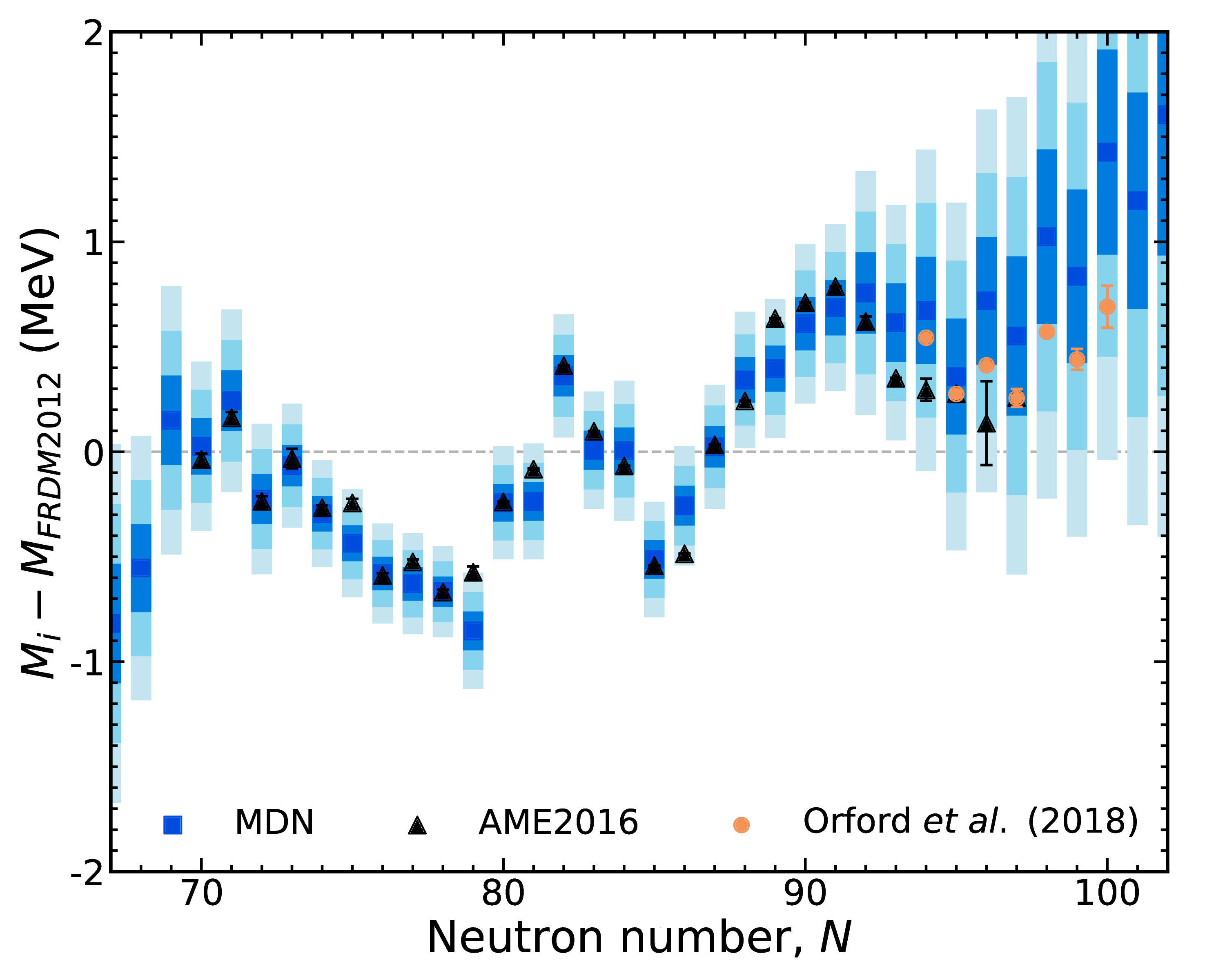

Novel method to predict ground state masses and uncertainties

Mixture Density Network constrained by both data and physics

NEXUS provided: quick access to the Atomic Mass Evaluation / theory models for training
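A mixture density network ends in a layer that outputs, for each input (here, a nucleus), the weights, means and widths of a Gaussian mixture; training minimizes the negative log-likelihood of the data, and the mixture's mean and spread give a prediction with an uncertainty. The sketch shows only that generic output layer in plain Python; the network itself and the physics constraints used in the mass study are not reproduced, and all names and numbers are hypothetical.

```python
import math
from typing import List, Tuple


def log_softmax(logits: List[float]) -> List[float]:
    """Log of the mixture weights, computed stably."""
    m = max(logits)
    log_norm = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_norm for x in logits]


def mdn_nll(logits, means, log_sigmas, target: float) -> float:
    """Negative log-likelihood of `target` under the predicted Gaussian mixture."""
    log_terms = [
        lw - ls - 0.5 * math.log(2.0 * math.pi) - 0.5 * ((target - mu) / math.exp(ls)) ** 2
        for lw, mu, ls in zip(log_softmax(logits), means, log_sigmas)
    ]
    m = max(log_terms)  # log-sum-exp for numerical stability
    return -(m + math.log(sum(math.exp(t - m) for t in log_terms)))


def mdn_moments(logits, means, log_sigmas) -> Tuple[float, float]:
    """Mixture mean and standard deviation (law of total variance)."""
    weights = [math.exp(lw) for lw in log_softmax(logits)]
    mean = sum(w * mu for w, mu in zip(weights, means))
    var = sum(w * (math.exp(2.0 * ls) + (mu - mean) ** 2)
              for w, mu, ls in zip(weights, means, log_sigmas))
    return mean, math.sqrt(var)


# Toy two-component output for one nucleus (made-up numbers, in MeV).
logits, means, log_sigmas = [0.0, 0.0], [1.0, -1.0], [0.0, 0.0]
print(mdn_moments(logits, means, log_sigmas))
print(mdn_nll(logits, means, log_sigmas, target=0.0))
```

Predicting log-widths rather than widths keeps every component's standard deviation positive without extra constraints.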

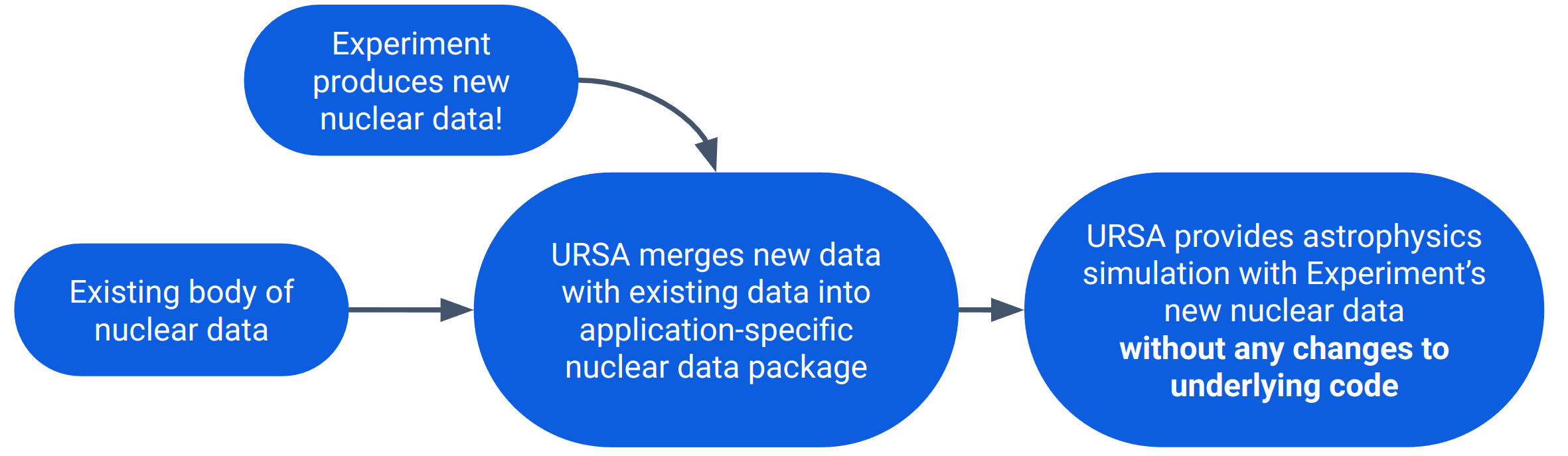

URSA: Unified Reaction Structures for Astrophysics

An effective and efficient workflow for immediately incorporating state-of-the-art nuclear data in astrophysics

This is particularly timely as FRIB (and other important experimental facilities) come online in the near future

International team led by T. Sprouse @ LANL

100% open source; technology now beginning to be used in astrophysical studies (MESA, PRISM, etc.)

Special thanks to

My collaborators

J. Barnes, M. Herman, D. Hoff, P. Jaffke, T. Kawano, N. Kleedtke, K. Kolos, A. Lovell, M. Li, K. Lund, G. C. McLaughlin, W. Misch, A. Mohan, P. Möller, D. Neudecker, J. Randrup, H. Sasaki, N. Schunck, T. Sprouse, R. Surman, N. Vassh, M. Verriere, P. Talou, Y. Zhu

& many more...


Summary

Modern applications require a seamless integration of data and modeling

NEXUS provides a framework for:

nuclear data, theoretical models, and a pipeline to applications

New evaluations can be constructed with complete history under version control (e.g. $^{239}$Pu)
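The talk does not say which version-control system holds the evaluation history. As a hypothetical sketch of the underlying idea, each evaluation step can be recorded with a content hash that also covers its parent's hash, so any later edit to an earlier step is detectable. The functions `commit` and `verify` and the payload fields are invented for illustration.

```python
import hashlib
import json


def _digest(body: dict) -> str:
    """SHA-256 of a canonical JSON encoding (sorted keys)."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def commit(history: list, message: str, payload: dict) -> str:
    """Append a (hypothetical) evaluation step whose id depends on content and parent."""
    parent = history[-1]["id"] if history else None
    body = {"parent": parent, "message": message, "payload": payload}
    entry_id = _digest(body)
    history.append({"id": entry_id, **body})
    return entry_id


def verify(history: list) -> bool:
    """Recompute every hash and parent link; False if anything was altered."""
    parent = None
    for entry in history:
        body = {"parent": entry["parent"], "message": entry["message"],
                "payload": entry["payload"]}
        if entry["parent"] != parent or _digest(body) != entry["id"]:
            return False
        parent = entry["id"]
    return True


history: list = []
commit(history, "initial model fit", {"upper_energy_MeV": 30})
commit(history, "add new (n,g) data", {"datasets": ["Mosby et al. (2018)"]})
print(verify(history))
```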

Data may be rapidly incorporated into applications to accelerate the pace of scientific discovery

This work has led to a tighter collaboration between the data, modeling and experimental communities

Results / Data / Papers @ MatthewMumpower.com